Source: Vector Choice - URS Preferred Partner

We’ve all been there: You have a deadline looming, a mountain of data to summarize, or a stubborn bug in your code. Then you remember that ChatGPT or Claude can solve it in thirty seconds.

You copy, you paste, and—poof—problem solved.

But for security teams, that "poof" is the sound of proprietary data vanishing into a black box. This is the world of Shadow AI, and it’s moving much faster than traditional IT policies can adapt.

What Exactly is Shadow AI?

Think of Shadow AI as the rebellious younger sibling of Shadow IT. While Shadow IT involves using unauthorized project management tools or file-sharing sites, Shadow AI goes deeper. It involves employees using unapproved AI platforms (think Gemini, Midjourney, or even AI browser extensions) to process and generate company content.

The difference? Traditional Shadow IT stores your data; Shadow AI consumes it.

The Hidden Costs of "Quick Wins"

When your team goes "underground" with AI, the risks aren't just theoretical. They’re immediate:

Data Leakage: That "proprietary code" being debugged? It’s now potentially training the next generation of a public model.

The Compliance Gap: Regulations like GDPR, HIPAA, and PCI DSS don’t take "but it made me faster" as an excuse for an audit trail gap.

The Hallucination Factor: If an employee uses an unverified AI to draft a financial report and the AI "hallucinates" a statistic, the company is on the hook for the fallout.

The Reality Check: Most employees aren't trying to be malicious. They’re just trying to be productive. Shadow AI is a systems problem, not a behavioral one.

How to Bring AI Out of the Shadows

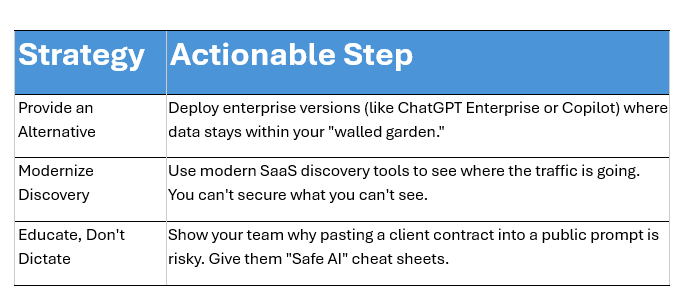

A blanket ban is like trying to stop the tide with a bucket—it won’t work, and it’ll just make your team hide their usage better. Instead, try these three strategies:

The Bottom Line

Shadow AI is an indicator of a workforce that is hungry for innovation. Organizations that win won't be the ones that ban AI, but the ones that build a secure bridge for their employees to use it.

The window for being proactive is closing. It’s time to turn on the lights.

Are you worried about how AI is being used in your department? We can help you draft a simple "Acceptable Use Policy" for AI to get your team started on the right track.
